People tend to freeze celebrities in their minds at the peak of their fame, forgetting that they are human beings who age like everyone else. The widespread use of cosmetic surgery and fillers today further distorts our perception of how people naturally look as they get older. Fortunately, some celebrities still remind us that there is absolutely nothing wrong with looking different than we did in our younger years.

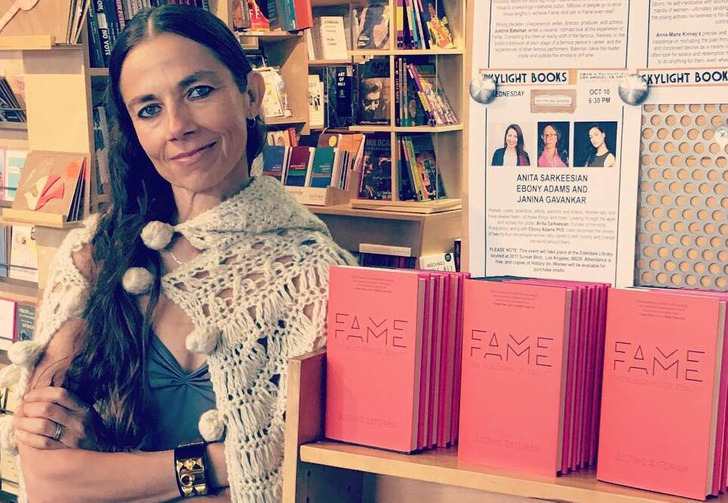

Justine proudly embraces her age.

If you were a kid or teenager in the ’80s and ’90s, you probably remember Justine as Mallory Keaton from the popular TV show Family Ties. After the show, she shifted her focus from acting to working behind the scenes, and today she is a successful author and director. In a recent interview, the actress, now 57, returned to the spotlight to share an important message with women who worry about getting older.

When she reached her 40s, people considered her “old.”

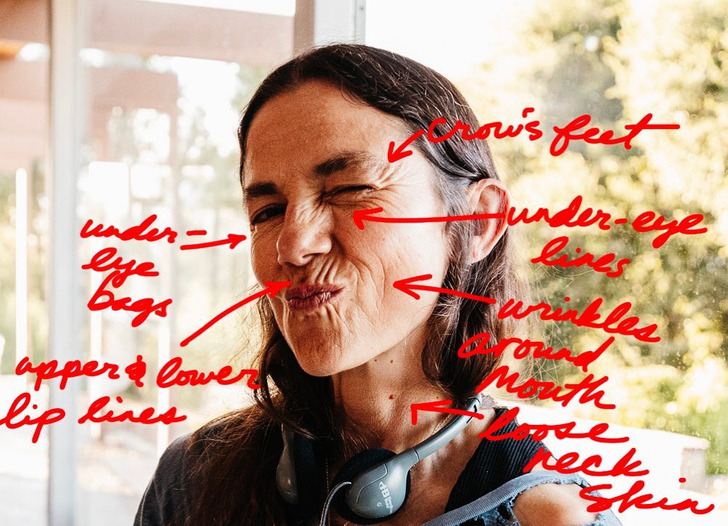

Justine didn’t think much about getting older until she went to search for something online. She wanted to refresh her memory about something that had happened at the height of her fame, but when she typed her name, Justine Bateman, into Google, the autocomplete suggested “looks old.” She was only around 40 at the time.

That revelation actually boosted her self-confidence.

When asked whether she had ever considered cosmetic procedures, Justine explained that plastic surgery would make her “lose all of her authority.” She said she is happy with how she looks now and takes satisfaction in the visible signs that she has grown into a different person from her younger self.

She even decided to write a book about her experiences.

Justine doesn’t criticize people who choose beauty treatments to look younger, but she does feel sad for them. She explains that she feels sorry for those who are so preoccupied with fixing their appearance that it distracts them from the meaningful parts of life. In 2021, Justine Bateman released a book titled “Face: One Square Foot of Skin,” which explores this issue.

Women shouldn’t spend too much time fixating on their looks.

“There’s absolutely nothing wrong with your face!” Justine wrote in the caption of an Instagram post promoting her book. Drawing on her own experiences, she illustrates society’s obsession with how women’s faces change as they age. When asked about aging, Justine says firmly that she doesn’t care about other people’s opinions: “I think I look rad. My face represents who I am. I like it, and that’s basically the end of the road.”

Several other public figures have made a conscious choice to embrace natural aging and resist the pressure to undergo cosmetic procedures. One of them is Cameron Diaz, who has chosen to age without Botox or similar treatments. After an unpleasant experience in which Botox changed her appearance in an unexpected way, Diaz decided to embrace her natural features and let the aging process unfold on its own terms.

Preview photo credit Invision / Invision / East News, Invision / Invision / East News

During the live performance of Journey’s “Don’t Stop Believin’” in 1981, Steve Perry’s vocals were truly phenomenal

In 1981, Steve Perry of Journey delivered a live performance of “Don’t Stop Believin’” that cemented his reputation as one of the greatest singers in history. Earlier that year, the song had become a worldwide sensation, and at a concert in Houston, Texas, the band showcased their exceptional talent on this now-iconic track.

Perry’s vocal delivery on the song is strikingly smooth and almost ethereal, and he carries the magnetic presence of a rock star who electrifies the audience. Viewers often comment that his live performance surpasses the studio recording in raw intensity and finesse. For the best viewing experience, you can watch an HD remaster of the 1981 Houston performance of “Don’t Stop Believin’” on Journey’s official YouTube channel.

With 274 million views, this live performance is one of Journey’s most popular videos, ranking third overall on their YouTube channel and number one among their live recordings. The footage comes from their Escape Tour, which supported their seventh studio album, Escape.

Journey played two shows in Houston, on November 5 and 6, 1981, and it remains unclear on which night “Don’t Stop Believin’” was recorded. The band was clearly in top form during the Escape Tour, as shown by the popularity of “Who’s Crying Now,” which was also recorded in Houston and is the second most-viewed live performance on their YouTube channel.

Fans praised the performance with comments like “No auto-tune, no backing tracks, just exceptional musicianship” and “Steve Perry sings like he’s effortlessly passing a test without studying.” These reactions reflect the awe and admiration fans still feel for Journey’s live rendition of “Don’t Stop Believin’” in Houston.

The song reached the top ten both in the US and internationally and eventually became Journey’s most enduring hit, certified 18 times platinum in the US.
